Nvidia researchers have unveiled what is claimed to be a first-of-its-kind deep learning artificial intelligence (AI) capable of repeating the actions of a human through a robot interface following a single demonstration - and without having to be told how to do so.

Part of Nvidia's push to make its graphics processors the go-to accelerators for all manner of deep-learning AI tasks, the researchers - led by Stan Birchfield and Jonathan Tremblay - have been hard at work developing new tasks for the Titan X graphics cards to chug through. Over the weekend the fruits of their labour were exposed at the International Conference on Robotics and Automation (ICRA) in Brisbane, Australia: a deep-learning system capable of repeating the actions of a human without being directly told how to do so.

'For robots to perform useful tasks in real-world settings, it must be easy to communicate the task to the robot; this includes both the desired result and any hints as to the best means to achieve that result, the research team explain in a blog post aimed at developers. 'With demonstrations, a user can communicate a task to the robot and provide clues as to how to best perform the task.'

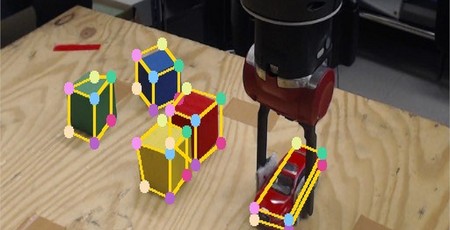

The idea is simple: The deep-learning system watches a human perform a task once through a built-in camera, then generates a human-readable version of its interpretation of events - the latter handy to check it hasn't got the wrong end of the stick. If its description of the steps required to repeat the task are acceptable, the resulting algorithm is then executed on a robot to reproduce the event - completing the same task without ever being explicitly told hold to do so.

In a video demonstration, a researcher activates the system by stacking cubes in a particular order. Following the demonstration, the deep-learning system - which is able to train itself on synthetic data, rather than requiring excessive amounts of real-world data - creates a program through inference and repeats the stacking task.

The team's paper, Synthetically Trained Neural Networks for Learning Human-Readable Plans from Real-World Demonstrations, is available via Arxiv now.

MSI MPG Velox 100R Chassis Review

October 14 2021 | 15:04

Want to comment? Please log in.