Nvidia's efforts to convince developers of the benefits of implementing features specific to its new Turing graphics processing architecture, as found in the GeForce and Quadro RTX families of graphics cards, continue with the promise of improved virtual reality (VR) rendering performance via Texture Space Shading (TSS).

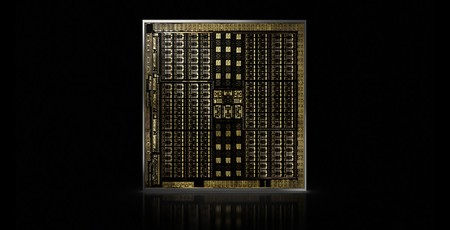

Turing first launched in the workstation-centric Quadro RTX family, where dedicated processing cores for ray tracing acceleration and artificial intelligence workloads speed up various professional tasks, before arriving in the consumer market with the GeForce RTX 2000 family. It's here, though, that Nvidia is having a few struggles. The shiny new hardware offers a range of improvements, from hybrid real-time ray tracing to accelerated high-quality antialiasing, but only in games which specifically implement the features. Ray tracing in particular carries a serious performance impact, and its availability through Microsoft's DirectX Raytracing (DXR) application programming interface (API) has been delayed, making adoption something of a battle.

It's in this space that Nvidia developers Henry Moreton and Nick Stam have published a look at another Turing-specific feature the company is hoping to convince game developers to adopt: Texture Space Shading (TSS). 'Using TSS, a developer can simultaneously improve quality and performance by (re)using shading computations done in a decoupled shading space,' the pair explain in a post on the Nvidia Developer Blog. 'Developers can use TSS to exploit both spatial and temporal rendering redundancy. By decoupling shading from the screen-space pixel grid, TSS can achieve a high-level of frame-to-frame stability, because shading locations do not move between one frame and the next. This temporal stability is important to applications like VR that require greatly improved image quality, free of aliasing artefacts and temporal shimmer.'

That last point is where Nvidia is looking to focus its efforts. Virtual reality, by its very nature, involves rendering the same scene twice from two different positions at a rate of at least 90 frames per second, though this frame rate can be reduced through artificial frame generation techniques. The two rendered views are mostly identical, differing only in the angle from which they are viewed in order to produce the stereoscopic effect that makes things appear three-dimensional. Using TSS, the company's developers claim, it's possible to render one view and then use it as the basis for the second, rendering only those objects that were obscured in the first view and dramatically reducing the computational power needed.
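As a rough illustration of why decoupling shading from the screen helps stereo rendering, the Python sketch below treats shading results as a cache keyed by texel coordinates rather than screen pixels: whichever eye needs a texel first pays to shade it, and the other eye reuses the stored result. This is a toy model under stated assumptions, not Nvidia's API. The `TexelShadingCache` class, the `expensive_shader` function and the hand-built visibility sets are all hypothetical, whereas real TSS runs on the GPU with visibility determined by rasterization.

```python
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical integer (u, v) coordinate in a texture-space shading grid.
Texel = Tuple[int, int]


class TexelShadingCache:
    """Stores shading results per texel so any view needing a texel reuses it."""

    def __init__(self, shade: Callable[[Texel], float]) -> None:
        self._shade = shade
        self._results: Dict[Texel, float] = {}
        self.invocations = 0  # how many times the expensive shader actually ran

    def shade_visible(self, visible: Iterable[Texel]) -> Dict[Texel, float]:
        out: Dict[Texel, float] = {}
        for texel in visible:
            if texel not in self._results:
                # First view to see this texel shades it; later views reuse it.
                self._results[texel] = self._shade(texel)
                self.invocations += 1
            out[texel] = self._results[texel]
        return out


def expensive_shader(texel: Texel) -> float:
    """Stand-in for a costly shading computation (hypothetical)."""
    u, v = texel
    return ((u * 31 + v * 17) % 256) / 255.0


def render_stereo(left_visible: Iterable[Texel], right_visible: Iterable[Texel]):
    """Shade the left eye, then the right eye, sharing one texel cache."""
    cache = TexelShadingCache(expensive_shader)
    left = cache.shade_visible(left_visible)
    right = cache.shade_visible(right_visible)
    return left, right, cache.invocations


# Toy visibility: the right eye sees the same 8x8 patch shifted by one column.
left_view = {(u, v) for u in range(0, 8) for v in range(8)}
right_view = {(u, v) for u in range(1, 9) for v in range(8)}
left, right, calls = render_stereo(left_view, right_view)
print(calls)  # 72 shader runs instead of 64 + 64 = 128
```

In this toy case the second eye only shades the eight texels the first eye could not see, which mirrors the article's description of rendering only what was obscured in the first view.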

TSS isn't exclusive to virtual reality, though. The developers explain that TSS can reduce the performance impact of low-frequency effects like fog, can store and recall the output of any shader type, and can even store the output of general computation, potentially speeding up other GPU-accelerated tasks, including physics simulations.

More detail on TSS, which is not yet supported in any commercial games and is exclusive to graphics cards based on a Turing-family GPU, can be found in the blog post.
