We've just witnessed the last days of large, single-chip GPUs

March 31, 2010 | 11:15


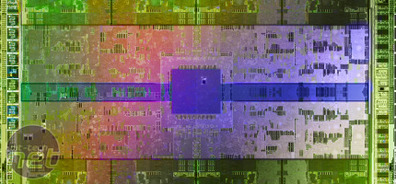

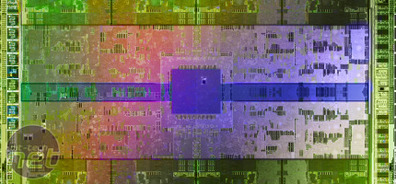

Just as with the 65nm manufacturing process used for GT200, I'm certain Nvidia overestimated what the 40nm node would offer when it first designed Fermi, and that this miscalculation is a large part of why the GeForce GTX 480 runs so hot and draws so much power.

We know each major GPU architecture takes several years to hammer out, so fabless companies such as Nvidia have to predict where their fabrication partners (TSMC in this case) will be by the time the chip is ready.

As it stands, TSMC has had a more than rough year with its 40nm node, and there's been considerable stress for both ATI and Nvidia. However, ATI had the advantage of starting on 40nm with the Radeon HD 4770, a small mid-range part that let it learn the process before committing its big chips. It clearly hasn't forgotten the lesson it learned at the expense of the HD 2900 XT, which first arrived as a massive 80nm die before being respun on TSMC's then-upcoming 55nm node at a more digestible price.

Luckily for Nvidia, the GTX 480 isn't quite the failure the HD 2900 XT was; at least it's faster than the previous generation. Even setting aside its lateness and practical engineering issues, though, its die size and power draw are truly massive.

The thing is, TSMC hasn't yet demonstrated that its next fabrication node (likely 28nm) is commercially viable. So while we fully expect Nvidia to 'pull an ATI HD 3000 series' in six months' time and redo Fermi on a smaller process, resulting in a much more power-efficient GPU, TSMC's troubles, combined with Nvidia's desperation for a new process, mean Nvidia is likely looking to GlobalFoundries, the manufacturing firm spun off from AMD last year.


The heat and power consumption of the GTX 470 and 480 mean it's highly unlikely we'll see a dual-GPU product any time soon. And as much as the naysayers claim we cannot compare the "dual GPU" Radeon HD 5970 to the "single GPU" GTX 480, the fact is that these products are all competing for the title of "fastest graphics card", and that's a title which isn't leaving AMD any time soon.

While there are many reasons to be pessimistic about Fermi, one lesson Nvidia has learnt is to design Fermi with more modularity in mind, so we won’t have a repeat of the GT200 series, where Nvidia struggled to produce any derivatives for the all-important mainstream market.

We will be seeing more Fermi-based derivatives in the coming months, and Nvidia absolutely needs to nail them: ATI has already successfully launched a complete top-to-bottom DirectX 11 range, and after nine months of cold turkey, 2010 will show which Nvidia partners can take the strain and come out dancing.

A few might actually be better off for the reduced competition. BFG has already left the European market, along with many other smaller partners, and EVGA is set for a resurgence. Things could get interesting.

Looking to the future, I wonder if this will be the very last big GPU we ever see. After two big, hot GPUs, will Nvidia abandon this approach in favour of the ATI-esque (or should we say, Voodoo-esque) route of multi-chip graphics card design? Dare I suggest that it doesn't even care?

Are Nvidia’s ambitions now really to concentrate on CUDA applications in the HPC market, where big money is to be made? It’s certainly a huge growth area compared to the relatively mature PC gaming market.

Like so many of us, I never want to see PC gaming die, but in my opinion the days of multi-billion-transistor, single-chip graphics cards are practically over.
